At Google Cloud, Google is committed to giving customers the choice of…

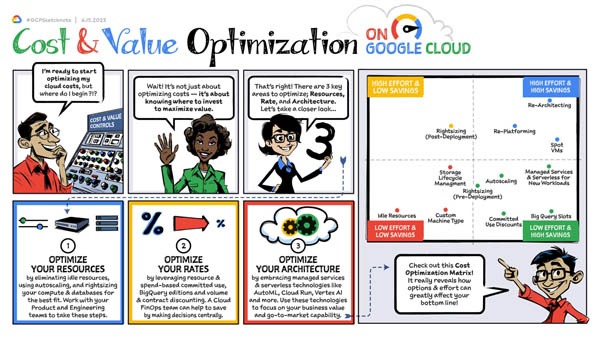

Cost and value optimization on Google Cloud

In today's ever-evolving landscape, the benefits of the cloud are easy to realize, but controlling the costs of a cloud platform can be challenging by nature. Google's cloud platform supports flexible cost control, yet not everyone manages to optimize usage costs effectively. For that reason, businesses need to master cost optimization on Google Cloud to unlock valuable business opportunities and drive the organization forward.

Why is it important to optimize the cost of using Google Cloud?

Google offers a range of native tools to help businesses track, manage, and optimize their spending on Google Cloud Platform. These tools can manage deployments, alert you to infrastructure failures, and provide visibility into cost drivers. They can even tell you when a VM instance is underutilized so you can choose a more suitable configuration.

However, many businesses find it difficult to keep their Google Cloud environment optimized because things change so quickly in the cloud. Even if the environment is in an optimized state after you have applied every available cost optimization tool, chances are that a week, a month, or a quarter later, costs will once again rise to uncontrollable levels and you will have to repeat the optimization process.

What is the best way to optimize costs when using Google Cloud?

1. Learn the billing and cost management tools

Due to the on-demand nature of cloud usage, costs are likely to add up if you don't monitor them closely. Once you understand your costs, you can start implementing measures to control and optimize your spending. To help with this, Google Cloud offers a powerful set of free billing and cost management tools that give you the visibility and insights you need to keep up with your cloud deployment.

For starters, make sure your organization and cost structure match your business needs. Then, drill into your services with the Billing reports feature to get a quick look at your expenses. You should also learn how to attribute costs to departments or teams using labels, and how to build custom dashboards to see expenses in more detail. You can also use quotas, budgets, and alerts to closely monitor your current cost trends and forecast them over time, reducing the risk of going over budget.
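To make the idea of budget alerts concrete, here is a minimal sketch of the check such an alert performs. It is not the Cloud Billing API: the function name and the 50%, 90%, and 100% thresholds are assumptions chosen for illustration, and the spend and budget figures are made up.

```python
def crossed_thresholds(spend, budget, thresholds=(0.5, 0.9, 1.0)):
    """Return the alert thresholds (fractions of the budget) that spend has reached."""
    if budget <= 0:
        raise ValueError("budget must be positive")
    ratio = spend / budget
    return [t for t in thresholds if ratio >= t]


# Hypothetical month-to-date spend and monthly budget, in the same currency.
print(crossed_thresholds(spend=460.0, budget=500.0))
```

In practice you would let the budget feature send these notifications for you; the sketch only shows why several thresholds are useful: the early ones leave time to react before the budget is actually exhausted.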

2. Pay only for the resources you need

Now that you have detailed visibility into your cloud costs, it's time to reassess your most expensive projects and identify compute resources that aren't delivering true business value.

Identify idle VMs (and disks):

The easiest way to reduce Google Cloud Platform (GCP) operating costs is to remove resources that are no longer being used. Think of proof-of-concept (POC) projects that have since been deprioritized, or VMs that were forgotten once they were no longer needed and that nobody wants to delete. Google Cloud provides several Recommenders that can help you optimize these resources, including an idle VM recommender that identifies idle virtual machines (VMs) and persistent disks based on usage metrics.

However, always be careful when deleting VMs. Before deleting a resource, ask yourself, “what is the potential impact of deleting this resource, and how can I recreate it if needed?” Deleting an instance removes its underlying disks and all of their data. A best practice is to take a snapshot of the instance before deleting it. Alternatively, you can simply stop the VM, which terminates the instance but keeps resources like disks or IP addresses until you detach or delete them.

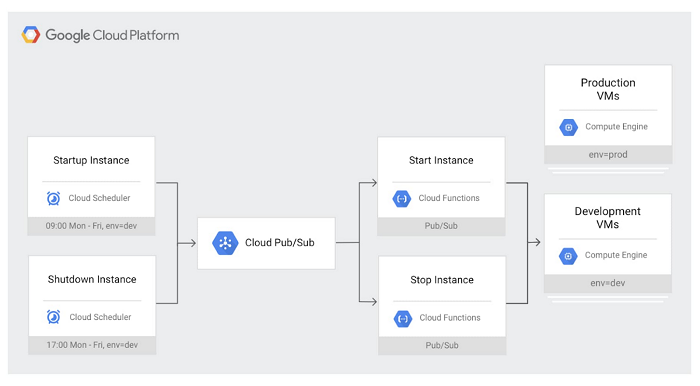

Schedule VMs to automatically start and stop:

The benefit of a platform like Compute Engine is that you only pay for the compute resources you use. Production systems tend to run 24/7; however, VMs in development, testing, or personal environments tend to be used only during business hours, and turning them off outside those hours can save you a lot of money. For example, a VM running 10 hours a day, Monday through Friday, is up for only 50 of the 168 hours in a week, so it accrues roughly 70% fewer billable hours per month than one left running around the clock.
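The arithmetic behind a start/stop schedule is easy to check. The sketch below counts running hours only; it ignores discounts and the cost of disks that persist while a VM is stopped, so treat the result as an approximation that lands close to the figure quoted above. The function name is illustrative.

```python
HOURS_PER_WEEK = 7 * 24  # 168


def scheduled_savings(hours_per_day, days_per_week):
    """Fraction of running hours avoided compared with running 24/7."""
    scheduled = hours_per_day * days_per_week
    if not 0 <= scheduled <= HOURS_PER_WEEK:
        raise ValueError("schedule must fit within one week")
    return 1 - scheduled / HOURS_PER_WEEK


savings = scheduled_savings(hours_per_day=10, days_per_week=5)
print(f"{savings:.1%} fewer running hours")  # prints: 70.2% fewer running hours
```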

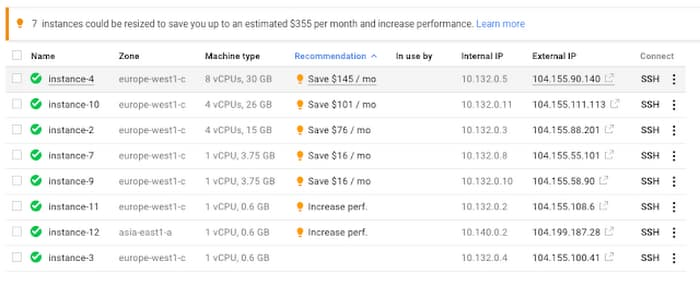

Rightsize VMs:

On Google Cloud, you can already make significant savings by creating a custom machine type with the right amount of CPU and RAM to meet your needs. But workload requirements can change over time. Instances that were previously sized correctly may now serve fewer users and less traffic. To help, rightsizing recommendations show you how to downsize a machine type efficiently based on changes in vCPU and RAM usage. These recommendations are generated from system metrics collected by Cloud Monitoring over the previous 8 days.

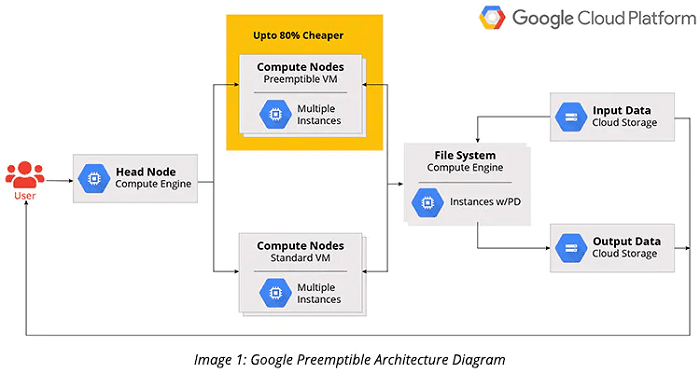

Take advantage of preemptible VMs:

Preemptible VMs are affordable compute instances that last up to 24 hours and are up to 80% cheaper than regular instances. They are suitable for fault-tolerant workloads that can keep making progress when one or more of their instances are interrupted. Examples include big data, genomics, media transcoding, financial modeling, and simulation. You can also combine regular and preemptible instances to finish compute-intensive workloads faster and more cost-effectively by setting up a dedicated managed instance group.

Optimize Cloud Storage cost and performance

When you run a traditional data management system, storage costs tend to get lost in the overall infrastructure cost, which makes them harder to manage properly. But in the cloud, storage is billed as a separate line item, and paying attention to storage usage and configuration can lead to significant cost savings.

Storage needs are also always changing. The storage class you selected when you first set up the environment may no longer be suitable for certain workloads. Besides, Cloud Storage has been around for a long time and now offers many features that were not available even a year ago.

If you are looking to save on storage, here are some areas to consider.

Storage classes:

Cloud Storage offers multiple storage classes (standard, nearline, coldline, and archive), each with its own costs and best-suited use cases. If you're only using the standard class, it might be time to review your workloads and re-evaluate how often your data is being accessed. Many companies use standard class storage for archival purposes and could reduce their spending by moving to nearline or coldline storage. And in some cases, if you're keeping objects for cold-storage use cases like legal discovery, the new archive class can save even more.

Lifecycle policies:

Not only can you save money by using different storage classes, but you can also make it happen automatically with object lifecycle management. By configuring a lifecycle policy, you can programmatically change an object's storage class based on a set of conditions, or even delete the object altogether if it's no longer needed. For example, imagine you and your team analyze the data during the first month after it is created; beyond that, you only need it for regulatory purposes. In that case, simply set a policy that moves your objects to coldline or archive once they reach 31 days of age.
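As a rough sketch of what such a policy looks like, the snippet below builds the 31-day rule from the example above as a lifecycle configuration in JSON. The helper name is hypothetical; the `SetStorageClass` action and `age` condition follow the Cloud Storage lifecycle configuration format, but check the current documentation before applying anything to a real bucket.

```python
import json


def storage_class_after(days, storage_class="COLDLINE"):
    """Build a lifecycle config that changes an object's storage class once it is `days` old."""
    return {
        "rule": [
            {
                "action": {"type": "SetStorageClass", "storageClass": storage_class},
                "condition": {"age": days},
            }
        ]
    }


policy = storage_class_after(31)
print(json.dumps(policy, indent=2))
```

The printed JSON could be saved to a file and applied to a bucket, for example with `gsutil lifecycle set`. Passing `storage_class="ARCHIVE"` would target the archive class instead.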

Deduplication:

Another common source of waste in storage environments is duplicated data. Of course, there are times when duplication is necessary. For example, you might want to replicate a dataset across multiple geographies so that local teams can access it quickly. However, in my experience working with clients, a lot of duplicate data is the result of lax version control. The resulting copies take up space and are expensive to manage.

Fortunately, there are many ways to prevent duplicate data, as well as tools to prevent data from being deleted by mistake.

- If you are trying to maintain data resiliency, you can use a multi-region bucket rather than creating multiple copies in different buckets. This gives your stored objects geo-redundancy: your data is copied asynchronously across two or more locations.

- You can set up object versioning policies to ensure you keep the right number of copies. If you're still worried about losing data, consider the bucket lock feature, which ensures that objects aren't deleted before a specific date or time.
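One way to keep the number of versions in check is a lifecycle rule on a versioned bucket. The sketch below is an illustration under assumptions, not a recommended setting: the helper name and the value 3 are made up, while `isLive` and `numNewerVersions` are conditions from the Cloud Storage lifecycle configuration format.

```python
import json


def delete_old_versions(newer_versions):
    """Build a rule that deletes noncurrent versions having at least `newer_versions` newer versions."""
    if newer_versions < 1:
        raise ValueError("newer_versions must be at least 1")
    return {
        "rule": [
            {
                "action": {"type": "Delete"},
                "condition": {"isLive": False, "numNewerVersions": newer_versions},
            }
        ]
    }


# With 3, the live version and the two most recent noncurrent versions are kept.
print(json.dumps(delete_old_versions(3), indent=2))
```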

Tune your data warehouse

Businesses of all sizes come to BigQuery for modern data analytics. However, some configurations are more expensive than others. Take a quick look at your BigQuery environment and set up some guardrails to help keep your costs down.

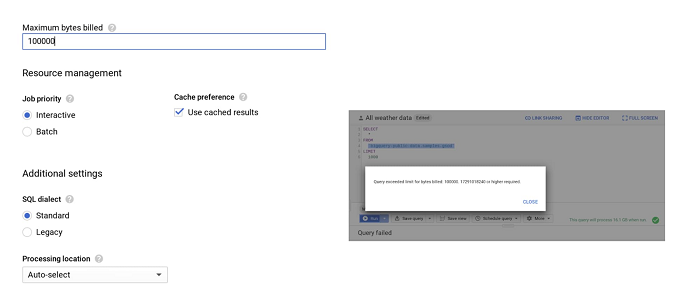

Enforce controls:

To limit query costs, use the maximum bytes billed setting. A query that would exceed the limit fails, and you are not charged for it.

Along with enabling cost control at the query level, you can apply the same logic at the user and project level.
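The query-level cap described above can be sketched as a job request body for the BigQuery REST API, where the setting is called `maximumBytesBilled` (the Python client exposes the same setting as `maximum_bytes_billed` on `QueryJobConfig`). The helper name, the table name, and the 1 GB cap are assumptions for illustration.

```python
import json


def capped_query_job(sql, max_bytes_billed):
    """Build a BigQuery jobs.insert request body with a per-query billing cap."""
    if max_bytes_billed <= 0:
        raise ValueError("max_bytes_billed must be positive")
    return {
        "configuration": {
            "query": {
                "query": sql,
                "useLegacySql": False,
                # int64 values are sent as strings in the JSON API.
                "maximumBytesBilled": str(max_bytes_billed),
            }
        }
    }


# Hypothetical table name; cap the query at 1 GB (10**9 bytes).
body = capped_query_job("SELECT name FROM `my_project.my_dataset.my_table`", 10**9)
print(json.dumps(body, indent=2))
```

Setting the cap per query keeps a single badly written query from scanning far more data than intended; the user-level and project-level limits mentioned above are configured separately as quotas.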

Using partitioning and clustering:

One benefit of partitioning is that BigQuery automatically cuts the price of stored data by 50% for every partition or table that has not been modified for 90 days, by moving that data into long-term storage. It is more cost-effective and convenient to keep your data in BigQuery than to move it into lower-tier storage. There is no degradation in performance, durability, availability, or any other functionality when a table or partition is moved to long-term storage.
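To see why partitioning matters for this discount, consider a small worked example. Each partition ages independently, so older partitions get the reduced rate even while new data keeps arriving. The price per GB below is a placeholder, not a real BigQuery price, and the partition sizes and ages are invented for illustration.

```python
LONG_TERM_DAYS = 90       # days without modification before the reduced rate applies
LONG_TERM_DISCOUNT = 0.5  # 50% price reduction for long-term storage


def monthly_storage_cost(partitions, price_per_gb):
    """Sum the monthly cost of partitions given as (size_gb, days_since_modified) pairs."""
    total = 0.0
    for size_gb, days_since_modified in partitions:
        rate = price_per_gb
        if days_since_modified >= LONG_TERM_DAYS:
            rate *= 1 - LONG_TERM_DISCOUNT
        total += size_gb * rate
    return total


# Three hypothetical 100 GB partitions; two have been untouched for over 90 days.
partitions = [(100, 5), (100, 120), (100, 400)]
print(monthly_storage_cost(partitions, price_per_gb=0.02))
```

If the same 300 GB lived in a single unpartitioned table that was modified five days ago, the whole table would be billed at the full rate.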

Check the streaming inserts:

You can load data into BigQuery in two ways: as a batch load, or in real time using streaming inserts. When optimizing BigQuery costs, the first thing to do is check your bill and see if you're being charged for streaming inserts. If so, ask yourself: “Do I need the data to be available immediately (seconds instead of hours) in BigQuery?” and “Am I using this data for any real-time use cases once the data is available in BigQuery?” If the answer to either of these questions is no, then we recommend switching to batch loading, which is free.

Using Flex Slots:

Due to rapidly changing business requirements, Google introduced Flex Slots, a new way to buy BigQuery slots for durations as short as 60 seconds, in addition to monthly and annual flat-rate commitments. By combining on-demand and flat-rate pricing in this way, you can respond quickly and cost-effectively to changing analytical needs.

Filter your network traffic

Google Cloud comes with a number of tools that give you visibility into your network traffic (and, accordingly, its costs). You can also make some quick configuration changes to reduce your network costs.

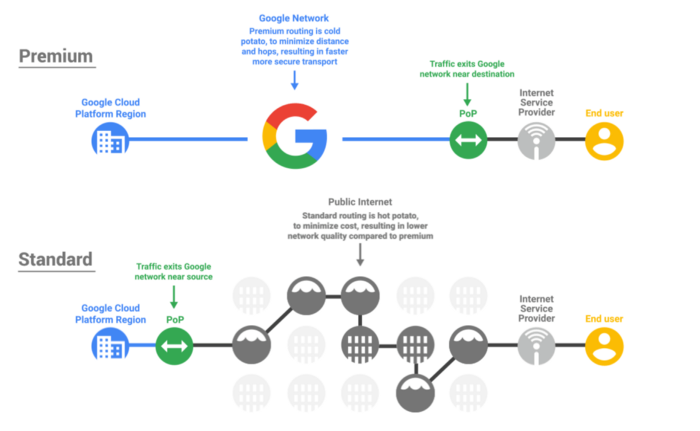

Network service tiers:

Google Cloud lets you choose between two network service tiers: premium and standard. For great global performance, you can opt for the premium tier, which has always been the tier of choice on Google's network. The standard tier offers lower performance but can be a suitable alternative for some cost-sensitive workloads.

- Cloud Logging: You may not know it, but you can control the cost of network traffic visibility by filtering out logs that you no longer need. Some common types of logs can be safely excluded. The same applies to Data Access audit logs, which can be quite large and incur additional costs.

By applying these ways to optimize the cost of using Google Cloud, businesses can tailor their configurations to their own needs and goals. Optimizing usage costs also lets you proactively direct investment toward the resources that effectively serve the growth of your business.

Contact Gimasys for advice on a transformation strategy that fits your business situation and to try Google Cloud Platform for free:

- Hotline: Hanoi: 0987 682 505 – Ho Chi Minh: 0974 417 099

- Email: gcp@gimasys.com